Refactoring SQL: making legacy code modular

What happens when you need to make a traditional SQL script modular?

Part of the “Mastering dbt” series. Access to the full Study Guide. Let’s connect on LinkedIn!

Notes from this guide.

When working with dbt in real life, you may find yourself having to transition from a traditional approach - long SQL scripts and stored procedures - to a modular one using dbt.

This happens in 6 steps:

Migrate your existing SQL code

Implement dbt sources

Choose a refactoring strategy

Implement CTE groupings and cosmetic cleanup

Apply standardised layering

Audit the output

1. Migrate your existing SQL code

The first step is to introduce your legacy code into dbt.

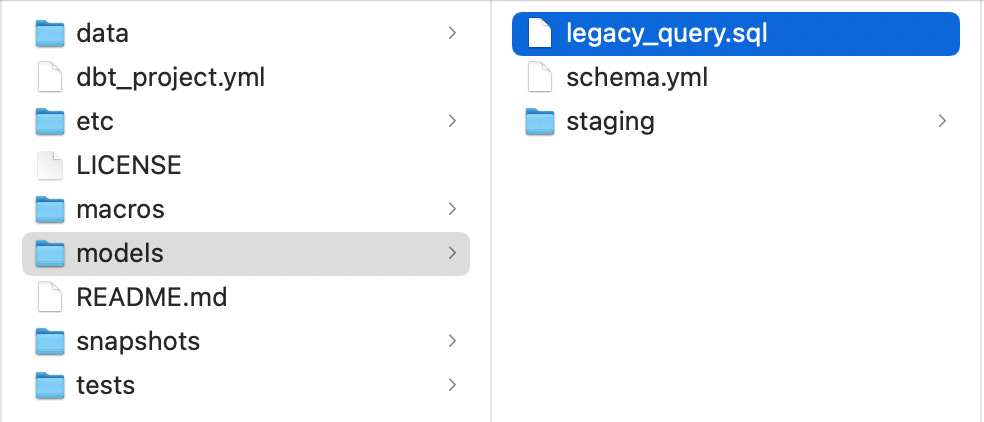

Add a legacy folder under models and paste your legacy code into a file under it. If you are also moving warehouses, you may also have the additional task of changing the SQL flavour of your code.

Once your code is ready, dbt run the file.

In this step, you don’t change the logic. If you do it now, auditing the refactoring may become confusing. Just adapt the existing logic, and add new transformations after refactoring has been audited.

2. Implement dbt sources

In this step, we replace the hardcoded file references (my_database.my_schema.my_table) with source functions.

We’ve covered Sources and why we love them: they allow us to report on source freshness and establish clear dependencies. They also make changing a schema or database a breeze, as we only need to change the sources.yml file rather than making manual changes throughout our scripts.

So, we start by creating our sources.yml file. It’s recommended to separate your sources into different folders if they come from different systems.

Then, replace any direct references with the source function ({{ src(’my_source’, ‘my_table’) }}) and dbt run the file to test if it worked.

3. Choose a refactoring strategy

There are two strategies:

In-place refactoring:

You move the legacy file into the marts folder and work directly on it.

By doing this, you won’t have any models to delete once refactoring is done.

However, any dashboards referencing this model will break while you refactor the code. Also, to review the code changes, you’d need to navigate through Git commits, and auditing the output would be more complex since the original file is overwritten.

Alongside refactoring:

You copy the legacy file into a marts folder and refactor on top of that copy.

This way, anything that references the legacy model will continue to work while you work on the changes. Also, you can do the work in smaller PRs, and you can audit your changes in the dev environment.

The downside is that you will have old files in your project while you refactor them. This can be complex, especially if you are migrating several files.

4. Implement CTE groupings and cosmetic cleanup

In this step, we’ll do a cosmetic cleanup of the code so we understand better its different parts. Then, we will start moving chunks of code to their dedicated groupings.

Cosmetic cleanup

Here, we are looking at the code superficially and just applying best practices and conventions. This could be:

adding whitespace

breaking up long lines of code

lowercase SQL

This will help us with the next step.

Implement CTE groupings

Now, we are untangling the code by identifying source pulls, undoing nested queries, and turning it all into one final select statement.

To do that, we are moving chunks of code into 4 different categories:

1. Import CTEs

These are the CTEs that simply pull the source data. This would be a “select * from {{ src(’my_source’, ‘my_table’) }}”. At most, you can filter the data here, if necessary.

Go through the code and identify the references to source data. Create one CTE for each source and then replace these references with the respective CTE name.

2. Logic CTEs

Now, we are going to identify the chunks of code that contain logical transformations. Start from the innermost queries and move any nested selects into their own dedicated CTE, always replacing them with the CTE name in the original code.

Keep doing this until you end up with only one select statement in the original code pulling from all the CTEs that you created above.

3. Final CTEs

The remaining select statement will be your final CTE. This is the final product of the model.

4. Simple SELECT statement

Finally, add the “select * from final” statement.

5. Apply standardised layering

For this step, we are identifying the parts of the code that will be part of a staging, intermediate, or marts model. We reviewed the concepts of each layer previously.

We’ll separate the CTEs into further groupings of staging, intermediate, and marts models.

Two things to note in this step:

dbt gives the guidelines below to layer your code. However, as code differs in complexity and goals, ultimately, what you need to keep in mind is that your code needs to follow modularity principles. What you’ll need to do to achieve that may vary from script to script.

during refactoring, you may run into incorrect logic. Fixing it at this step may make auditing confusing. You can choose to fix it now and keep that in mind during the audit, or make a note to address this once refactoring is done.

Staging

For staging models, identify the transformations to source data that can occur without joining them to each other. These transformations can be in different CTEs, so this may involve merging two CTEs into one.

For example, this could involve putting all column renames for the same source together into one CTE early on. Make sure you change the column names down the line, as needed.

You can do the same with cleaning transformations. The idea is to ensure modularity, that is, no repetitive code and moving staging transformations early in the code.

Once you’re happy that you’ve isolated all your staging transformations, you can move them to a separate stg file in the staging folder and update the references in the file you’re working on.

Intermediate and marts

With the remaining logic, you can identify any reusable pieces of code and move them into intermediate models, always replacing the references in the original accordingly.

Intermediate models are not always needed. You can see what types of transformations would go into them here.

Everything else would be a fact model and can be moved to fct files, separating pieces of code in a way that makes it more easily understandable.

6. Audit the output

Once we’re happy with the new models, make sure to dbt run them all so they’re ready for auditing.

dbt recommends using the audit_helper package to perform your audit. It comes with several functionalities that help you compare two models.