Data modeling techniques for more modularity

Modularity organises the work and makes data more reliable. This is essential to build trust and credibility internally.

Notes from this dbt blog post as part of the “Study for the dbt Analytics Engineering Certification with me” series. Access to the full Study Guide.

Why move to a modularity approach and how?

As an experienced analyst, I know that once you present dirty/incorrect data once, it can be hard to get your stakeholders to listen to you again.

Modularity stops data professionals from putting out the same fires repeatedly and makes code comprehensible to anyone who accesses it, which is helpful in case of staff movement.

A move from:

Scripts with thousands of lines with the same cleaning/preparing steps being applied to source data repeatedly.

Complex stored procedures

Untraceable changes

To:

Separate models with their own specific steps: clean + prepare (staging models) + present (dimensions & facts) – you start from foundation work (clean/prepare) rather than from raw data

Model access control with granularity

Version-controlled data transformation

What makes for good modularity

Consistently defined and named

Easy to interpret (DRY)

Straightforward to debug and optimise

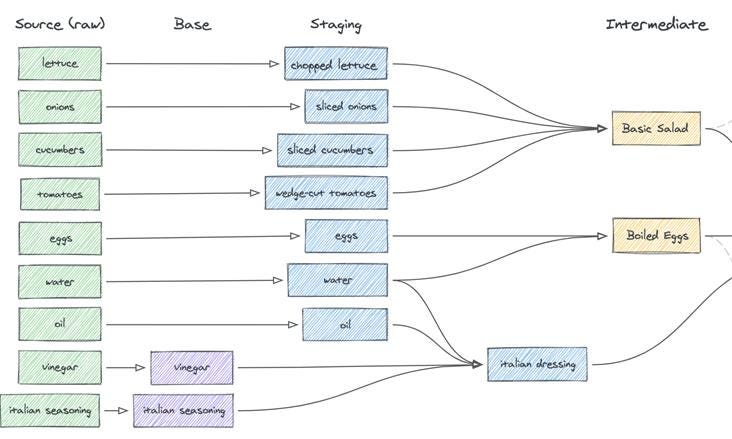

Modular DAG: sources –> base (optional) –> staging –> intermediate (optional) -> facts & dimensions

Analysts pull from facts & dimensions (orange) models – already cleaned and prepped – to manipulate the data and perform their analysis. In other cases, the data team can create an extra step to generate tables to answer business questions. In each case, it is ensured that the team will be working with clean data with the right logic applied.

3 tenets of the modular framework

naming conventions

readability

ease of debugging + optimization.

1. Naming conventions

Standard naming conventions avoid duplicate work and clearly define the role and status of a model. This, combined with an effective peer review process, ensures a streamlined process. Conventions may differ from company to company, but it’s important to have one.

Model convention at dbt Labs: the shortest path between source and end-user data.

Base models (optional):

Definition: When models need extra steps before they are ready to become a resource for marts (staging). For example, when two sources need to be cleaned and subsequently joined, as they are not going to be used on their own. The base model will prevent these two steps from being done in the same model to ensure a layer of defence in case changes are needed.

Convention: base_sourcename

Staging models:

Definition: prepares data for business logic. This layer generates clean and standardised resources to prevent repetitive work in the data marts.

Typically, one-to-one reflections of source: one stg model for every source

Applies transformations (type casting, renaming columns, filtering out unnecessary data, etc.) to the data so it is ready for business logic.

Every staging model should be ready for use at the data marts stage without further preparation being necessary. Think about ways to avoid repetitive operations at the data mart stage.

Convention: stg_sourcename

Intermediate models (optional):

Definition: splits complicated logic into separate models for readability and avoiding repetitive work (like joining stg models).

Convention: int_stgmodelname

Example model:

Vinegar and Italian seasoning (purple) will never be used on their own, so there are two initial steps needed before they can be a resource for marts: 1) cleaning & standardisation and 2) join. These two steps shouldn’t be performed in the same model, hence the need for a base layer (clean & prep) before they get joined at the stg layer to serve as a resource for marts.

Basic salad and Boiled eggs (yellow) are added as intermediate models to include operations that would be necessary for multiple marts. You don’t do these operations at the stg stage because you want to avoid clean & prep + operations to be done at the same layer.

Data mart models:

Definition: models that perform heavier transformations to apply business logic to the cleaned data. Pull from stg models and become resources for analysis.

Dimension and facts tables

Transformations: joins of stg models, case when, window functions, etc.

Convention: fct_martname_description (for fact tables) or dim_ martname_description (for dimension tables)

Where transformation stops and analysis begins

This will depend on the end-user and the analysis tool used.

Your final models may need to be ready for analysis in the form of wide tables if your end-user and/or analysis tool don’t have the capability/skills to process fact + dim tables. In this case, you can have an additional report (rpt_) layer in your project.

However, analysis tools are usually more versatile when it comes to making final manipulations to the data to answer specific questions. Also, you may not know every business question that may come up in the future at the modelling stage. Therefore, if it’s easier to leave final processing in the hands of the end-user, then it is also an option to stop at the data mart stage and leave the rest of the work to the analysis tool.

2. Data readability

Generally, it’s best to keep models within the 100-line limit of code for easy readability.

Macros and packages can help with that as they invoke blocks of SQL code while taking up the space of a few lines. You can also write your own macros to avoid repetitive coding.

3. Data model optimization and debugging

When data is built modularly, any fixes necessary at a given part of the project will subsequently run downstream.

Optimizing modelnecks

Modelnecks are models that are significantly increasing the run time of the pipeline.

Identifying these and optimising them becomes easier when the data is built modularly.

For example, you can create surrogate keys to simplify joins. Or add incremental materialisations to avoid unnecessary full refreshes.